CISC849 S2018 HW2

Latest revision as of 22:19, 14 March 2018

Due Friday, March 23, midnight

Description

NOTE: YOU MAY WORK ALONE OR IN TEAMS OF TWO

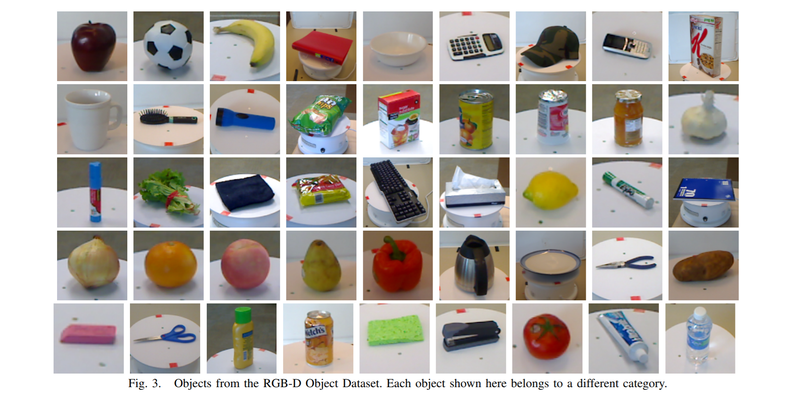

Continuing the theme from HW #1, this assignment is a classification challenge. You will use the UW RGB-D Object Dataset (here is the specific subset we will use), which was introduced in this ICRA 2011 paper. Example images are shown below:

Images are generally small -- in the range of ~50 x 50 to ~100 x 100.

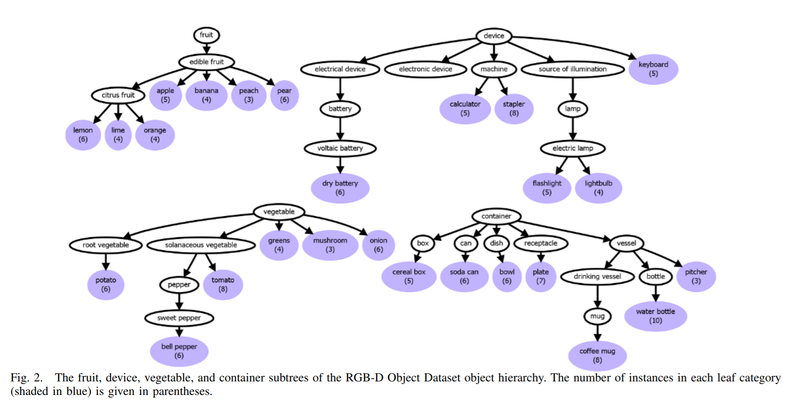

There are 51 object categories with 300 total instances (e.g., 5 examples of apple, 6 examples of pliers, and so on). We will use a subset of 26 of these object categories, which may be arranged as shown below in 4 main groups or subtrees: fruit, vegetable, device, and container. This means there are 25 other object categories in the dataset that WILL NOT BE USED.

Each object instance was photographed on a turntable with an RGB-D camera; hence there are multiple views of each instance, both as color and as depth images.

The naming scheme for the generated files is given [here](http://rgbd-dataset.cs.washington.edu/dataset/rgbd-dataset/README.txt), but you will focus on the color and/or depth images. For example, toothpaste_2_1_190_crop.png is the 190th RGB frame of the 1st video sequence of the 2nd instance of a toothpaste object, and toothpaste_2_1_190_depthcrop.png is the corresponding depth image of the same toothpaste instance from the same angle. There are a lot of images, and you should not need to use every one for training. Note that in the paper, the authors used only 1 out of every 5 video frames for training.
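The naming scheme above can be unpacked with a small helper. The following is a minimal sketch, not part of the assignment materials: the names `FrameInfo`, `parse_name`, and `keep_every_nth` are hypothetical, and selecting frames whose number is a multiple of 5 is only one assumed way to keep "1 out of every 5" frames.

```python
import re
from typing import List, NamedTuple, Optional

# Matches only the two file kinds discussed in the text:
#   <category>_<instance>_<video>_<frame>_crop.png       (RGB)
#   <category>_<instance>_<video>_<frame>_depthcrop.png  (depth)
_NAME_RE = re.compile(
    r"^(?P<category>.+)_(?P<instance>\d+)_(?P<video>\d+)_(?P<frame>\d+)"
    r"_(?P<kind>crop|depthcrop)\.png$"
)


class FrameInfo(NamedTuple):
    category: str
    instance: int
    video: int
    frame: int
    kind: str  # "rgb" or "depth"


def parse_name(filename: str) -> Optional[FrameInfo]:
    """Return the parsed fields, or None if the name is not an RGB/depth crop."""
    m = _NAME_RE.match(filename)
    if m is None:
        return None
    kind = "rgb" if m["kind"] == "crop" else "depth"
    return FrameInfo(m["category"], int(m["instance"]),
                     int(m["video"]), int(m["frame"]), kind)


def keep_every_nth(filenames: List[str], n: int = 5) -> List[str]:
    """Keep only frames whose frame number is a multiple of n (assumed rule)."""
    kept = []
    for name in filenames:
        info = parse_name(name)
        if info is not None and info.frame % n == 0:
            kept.append(name)
    return kept
```

For instance, `parse_name("toothpaste_2_1_190_crop.png")` yields category `toothpaste`, instance 2, video 1, frame 190, kind `rgb`. Category names that themselves contain underscores are handled because the three numeric fields are matched from the right.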

Tasks

Your challenge will be to train a convolutional neural network (CNN or "convnet") in TensorFlow to categorize a given RGB and/or depth image into 1 of the 4 subtrees above -- NOT one of the 51 categories. You may use any TensorFlow-based convnet classification architecture that you wish, starting from random or pre-trained weights -- your choice. There are several places to possibly start from (you don't have to use any of them):

- Work through the TensorFlow tutorial on [using CNNs for the MNIST dataset](https://www.tensorflow.org/tutorials/layers) and then adapt the RGB-D data to be read in by the same network for training. Note that MNIST images are 28 x 28 and grayscale, so your input images would have to be converted to the same dimensions in order to work with the given network.

- Work through this [CIFAR-10 training tutorial](https://www.tensorflow.org/tutorials/deep_cnn) and then try to format the RGB-D images so that they can be read in and trained on by the same network (i.e., become 32 x 32 x 3 and have the appropriate directory structure and label files).

- Format the RGB-D images into four subtree directories such that you can fine-tune an existing Inception network on them [as described here](https://www.tensorflow.org/tutorials/image_retraining).
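For the third option, the images need to end up in one directory per subtree. The sketch below shows one possible way to do that with the standard library only. Everything named here is an assumption for illustration: `build_subtree_dirs` and `split_name` are hypothetical helpers, and `CATEGORY_TO_SUBTREE` is a placeholder containing a single obvious entry; the full 26-category mapping must be filled in from the subtree figure. Instance 1 is skipped by default so that held-out test images do not leak into the training directories.

```python
import shutil
from pathlib import Path

# PLACEHOLDER mapping: only one illustrative entry is given here.
# Fill in all 26 categories from the subtree figure before use.
CATEGORY_TO_SUBTREE = {"apple": "fruit"}


def split_name(filename):
    """Split '<category>_<instance>_<video>_<frame>_<kind>.png' from the right."""
    category, instance, _video, _frame, _kind = filename.rsplit("_", 4)
    return category, int(instance)


def build_subtree_dirs(src_dir, dst_dir, mapping=CATEGORY_TO_SUBTREE,
                       exclude_instances=(1,)):
    """Copy RGB crops into dst_dir/<subtree>/ and return the number copied."""
    src, dst = Path(src_dir), Path(dst_dir)
    copied = 0
    # "*_crop.png" matches RGB crops only; depth files end in "_depthcrop.png".
    for path in sorted(src.rglob("*_crop.png")):
        try:
            category, instance = split_name(path.name)
        except ValueError:
            continue  # name does not follow the expected scheme
        subtree = mapping.get(category)
        if subtree is None or instance in exclude_instances:
            continue  # unused category, or instance reserved for testing
        target = dst / subtree
        target.mkdir(parents=True, exist_ok=True)
        shutil.copy2(path, target / path.name)
        copied += 1
    return copied
```

Changing the glob pattern to `"*_depthcrop.png"` would build the equivalent layout for depth images.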

There are two main guidelines:

- Instance 1 of every object category (e.g., toothpaste_1, apple_1, etc.) may NOT be used for training (weight learning) or validation (hyperparameter tuning). Rather, it must be used only for testing (aka evaluation) of your final classifier(s).

- If you have limited time, at least learn how to classify the RGB images (color). Otherwise, also try to learn how to classify the depth images (pure shape) alone and present a comparison of how the two approaches worked.
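The first guideline can be enforced mechanically when building the data splits. Here is a minimal sketch under stated assumptions: `split_dataset` and `instance_key` are hypothetical names, and choosing the validation set by holding out whole instances (rather than individual frames) is a design choice of this sketch, not a requirement of the assignment.

```python
import random


def instance_key(filename):
    """Return (category, instance) parsed from the right of the file name."""
    category, instance, *_ = filename.rsplit("_", 4)
    return category, int(instance)


def split_dataset(filenames, val_fraction=0.2, seed=0):
    """Return (train, val, test) lists; test is exactly the instance-1 files."""
    test, rest = [], {}
    for name in filenames:
        key = instance_key(name)
        if key[1] == 1:
            test.append(name)  # instance 1 is reserved for testing
        else:
            rest.setdefault(key, []).append(name)

    # Hold out whole instances for validation so that near-duplicate
    # turntable views of one object never straddle train and validation.
    keys = sorted(rest)
    random.Random(seed).shuffle(keys)
    n_val = int(len(keys) * val_fraction)
    val = [n for k in keys[:n_val] for n in rest[k]]
    train = [n for k in keys[n_val:] for n in rest[k]]
    return train, val, test
```

Splitting by instance rather than by frame tends to give a validation accuracy that is closer to what the instance-1 test set will show, since consecutive frames of one object look very similar.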

Please submit a 2-page WRITE-UP of your approach and results. It should cover what network you used (citation + link to the GitHub or tutorial page you took code from), what modifications (if any) you made to it, how you conducted training (how many epochs, how the loss changed, what the training accuracy was), how you augmented or altered the training dataset, and what accuracies you were able to get on the classification tasks. Add notes about any issues you encountered or interesting observations you made.